Fact check: AI doctors on social media spreading fake claims

Videos of AI-generated doctors giving health and beauty tips on social media are becoming hugely popular, generating millions of clicks. How accurate are their claims? And how dangerous is AI in the medical field?

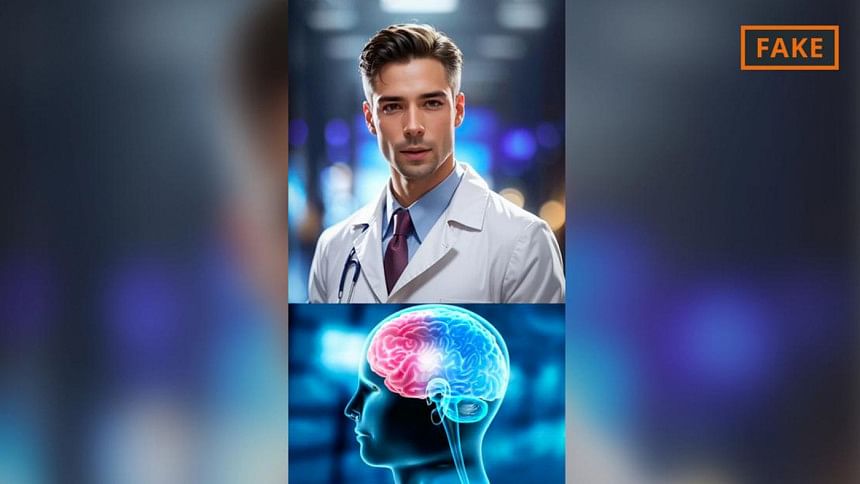

In videos posted on social media, figures who appear to be doctors, wearing white lab coats with stethoscopes around their necks, frequently give advice about natural remedies or tips on whitening teeth. In many cases, however, these aren't real doctors but bots generated by artificial intelligence (AI), sharing medical advice with hundreds of thousands of followers. Not everything they say is true.

Can chia seeds help get a handle on diabetes?

Claim: One AI-generated bot on Facebook claimed that "Chia seeds can help get diabetes under control." The video garnered over 40,000 likes, was shared more than 18,000 times, and generated over 2.1 million clicks.

DW fact check: False.

Chia seeds are trendy thanks to the many beneficial active agents they contain, including unsaturated fatty acids, dietary fiber, essential amino acids, and vitamins. A 2021 report from the US found that chia seeds had a positive effect on consumers' health. Participants with Type 2 diabetes and hypertension were found to have far lower blood pressure after ingesting a certain amount of chia seeds over several weeks. Another study from this year confirmed that chia seeds had antidiabetic and anti-inflammatory properties, among other things.

So, chia seeds can have positive health effects for people struggling with diabetes. "But nobody said anything about a cure," Andreas Fritsche, a diabetologist at Tübingen University Hospital, told DW. He explained that there was no scientific evidence that chia seeds could cure diabetes or help get it completely under control.

The video not only spread false information, it also portrayed a phony doctor. Social media abounds with such fake doctors sharing supposed hacks for good health. Sometimes, artificially generated "doctors" also share beauty tips involving household remedies that supposedly make teeth whiter or stimulate beard growth.

Many of these videos are in Hindi, even if most bear English titles in their usernames. A 2021 Canadian study found that India had become a hotspot for false information on health issues during the Covid-19 pandemic.

Data shows that India had an even higher circulation of disinformation concerning the pandemic than countries like the US, Brazil or Spain. The study argued this could be due to India's higher internet penetration rate, increasing social media consumption, and, in some cases, users' lower digital competence.

AI-generated doctors usually appear trustworthy, with white coats and a stethoscope around their necks or dressed in scrubs.

AI impersonating a doctor can be very misleading, explained Stephen Gilbert, professor of Medical Device Regulatory Science at the Dresden University of Technology, whose research includes medical software based on artificial intelligence. "It's about conveying the authority of a doctor, who usually plays an authoritative role in almost all societies," he told DW. That role includes prescribing medication, making diagnoses, and even deciding whether someone is alive or dead. "These are cases of intentional misrepresentations for certain purposes."

According to Gilbert, these purposes can include selling a specific product or service, such as a future medical consultation.

Can household remedies heal brain diseases?

Claim: On Instagram, one artificially generated doctor claimed in a video that "Grinding seven almonds, 10 grams of sugar candy, and 10 grams of fennel, taken over 40 days every evening with hot milk will heal any kind of brain disease." The account has over 200,000 followers, and the video was watched over 86,000 times.

DW fact check: False.

This video claims to offer concrete steps to cure all brain diseases. But the claim is utterly false, as Frank Erbguth, president of the German Brain Foundation, confirmed to DW.

He explained that there was no evidence that this recipe had any beneficial effects on brain diseases. An online search for this particular ingredient combination yielded no results. The German Brain Foundation advises people to immediately seek medical help if they experience symptoms such as signs of paralysis or impaired speech.

As with so many other videos from similar accounts, this one depicts an AI-generated figure dressed in a white lab coat. Gilbert explained that these videos are easy to recognize as fake because the purported doctor is a static image that shows minimal facial expression and moves only its mouth. But he also warned of the negative impact artificial intelligence could have on the medical field in the future.

"The highly concerning area that this will almost certainly move into will be to link all these processes together so that real-time responses are produced that are generated by the AI and linked to the generative audio AI of the response," he said.

Aside from manipulated videos, artificial intelligence also poses risks in other ways, such as deepfakes in medical diagnostics. In 2019, researchers working on an Israeli study managed to produce falsified CT scans, showing that tumors could be added to or removed from the images.

A study from 2021 achieved similar results when it produced realistic and convincing electrocardiograms depicting a fake heartbeat.

And chatbots pose another risk because their answers can sound reasonable, even when incorrect. "People ask questions, and [the bots] appear highly knowledgeable. That is a very dangerous scenario for patients," Gilbert said.

Yet, despite the risks, AI has played an increasingly prominent role in medicine in recent years. It can help doctors analyze X-ray and ultrasound images and offer support in making diagnoses or providing treatment.

"In many countries, medical consultation is very expensive or not accessible at all," said Gilbert. "So, for many people in many countries, having access to information could be a significant advantage."

But the question remains whether the information provided is still reliable and correct. To check this, users searching online for information about symptoms could watch videos by certified operators or, if possible, read scientific studies to cross-check information.

Gilbert added that it was a good idea to research which medical team was behind a particular website, app, or account. In cases where this was not evident, it was best to remain skeptical and assume the source was untrustworthy, he concluded.

For all latest news, follow The Daily Star's Google News channel.
